Proper Clustering Algorithm for Tweets

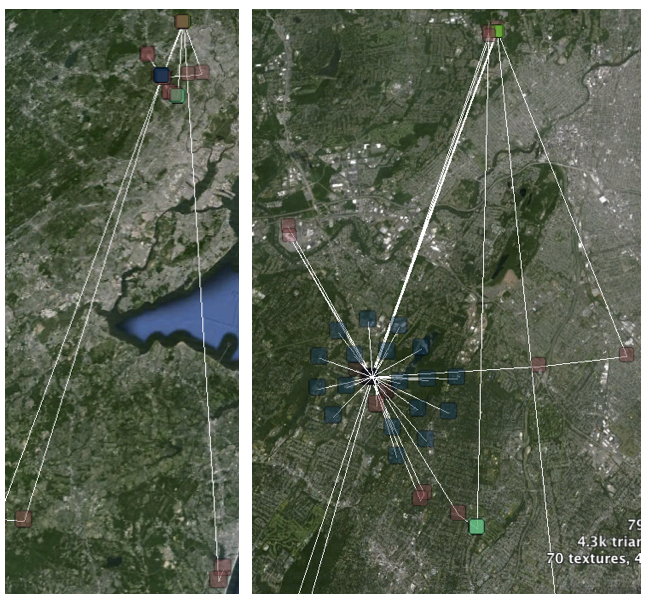

DBSCAN

The previous k-means clustering algorithm did a poor job of identifying the dense clusters. Two possible reasons are:

- Needing to explicitly specify k, the number of clusters, in advance

- Capping the number of iterations, which can stop the algorithm before it converges.

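Regardless, DBSCAN is much better suited to this task: it finds dense regions of arbitrary shape without needing the number of clusters up front, and it labels points in sparse regions as noise instead of forcing them into a cluster.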
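The notes do not say which DBSCAN implementation was used. As a rough, dependency-free sketch of the standard algorithm, the code below assumes each tweet has already been reduced to a 2-D point (for example longitude/latitude) and uses plain Euclidean distance, which is only an approximation on raw degrees. `eps` (neighborhood radius) and `min_samples` (points needed to form a dense core) are the usual DBSCAN parameters; the function names and sample points are hypothetical. scikit-learn's `sklearn.cluster.DBSCAN` provides the same algorithm with the same two parameters.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
NOISE = -1


def region_query(points: List[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within eps of points[i], including i itself."""
    px, py = points[i]
    return [j for j, (qx, qy) in enumerate(points)
            if math.hypot(px - qx, py - qy) <= eps]


def dbscan(points: List[Point], eps: float, min_samples: int) -> List[int]:
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels: List[Optional[int]] = [None] * len(points)  # None = not visited yet
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_samples:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        # i is a core point: start a new cluster and expand it
        labels[i] = cluster_id
        seeds = list(neighbors)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point reachable from a core point
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_samples:
                seeds.extend(j_neighbors)  # j is also a core point
        cluster_id += 1
    return labels  # every entry has been assigned by now


# Hypothetical example: two tight groups and one isolated tweet
tweets = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
          (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
          (10.0, 0.0)]
print(dbscan(tweets, eps=0.2, min_samples=3))  # → [0, 0, 0, 0, 1, 1, 1, -1]
```

Unlike k-means, nothing here fixes the number of clusters or an iteration cap: clusters grow until no more core points are reachable, and the isolated point is labeled noise (`-1`) rather than being absorbed into a cluster.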

Reliability over Time?

With the new density calculations for the clusters, should reliability over time (across a series of days) still be a concern?

Current Algorithm

- Determine the number and size of clusters with DBSCAN

- Determine the density of each cluster as num_tweets² / (area of the cluster's convex hull)

- Determine the regularity of tweets in each cluster on a per-3-hour basis
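A minimal sketch of the density and 3-hour steps, under several assumptions not stated in the notes: each cluster is a list of projected 2-D tweet locations, the density is read as the number of tweets squared divided by the convex-hull area, and timestamps are Unix seconds with bins aligned to the epoch (UTC). The hull uses Andrew's monotone chain and the area uses the shoelace formula; all helper names are hypothetical. Because the notes leave the regularity measure itself open, `counts_per_3h` stops at the per-bin counts (including empty bins between the first and last tweet) that such a measure would be built from.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
BIN_SECONDS = 3 * 60 * 60  # one 3-hour bin


def _cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Sequence[Point]) -> List[Point]:
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # drop endpoints shared by both chains


def polygon_area(vertices: Sequence[Point]) -> float:
    """Shoelace formula; 0.0 for fewer than three vertices."""
    n = len(vertices)
    if n < 3:
        return 0.0
    twice_area = sum(vertices[i][0] * vertices[(i + 1) % n][1]
                     - vertices[(i + 1) % n][0] * vertices[i][1]
                     for i in range(n))
    return abs(twice_area) / 2.0


def cluster_density(points: Sequence[Point]) -> float:
    """num_tweets ** 2 / area of the cluster's convex hull."""
    area = polygon_area(convex_hull(points))
    if area == 0.0:
        # Assumption: co-located or collinear clusters count as infinitely dense
        return math.inf
    return len(points) ** 2 / area


def counts_per_3h(timestamps: Sequence[int]) -> List[int]:
    """Tweet counts for every 3-hour bin from the first tweet's bin to the last's."""
    if not timestamps:
        return []
    bins = Counter(t // BIN_SECONDS for t in timestamps)
    first, last = min(bins), max(bins)
    return [bins.get(b, 0) for b in range(first, last + 1)]


print(cluster_density([(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0), (1.0, 1.0)]))  # → 6.25
print(counts_per_3h([0, 100, 10_800, 3 * 10_800 + 5]))  # → [2, 1, 0, 1]
```

Duplicate locations still count toward num_tweets but not toward the hull, and returning `math.inf` for a zero-area hull is an arbitrary choice made here. The area is in whatever units the coordinates use (square degrees for raw longitude/latitude), so densities are only comparable across clusters measured in the same units.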

TODO: How does this metric translate into a filtering parameter? How do we know whether it is good? How should this parameter be normalized?